A cameraless photography story for St Valentine’s Day…

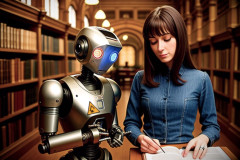

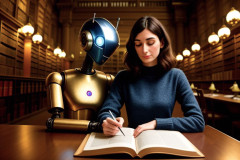

I had a studio photoshoot booked for last weekend. Unfortunately, my model was poorly and it was too late to book another. Consequently, I was in the unusual situation of actually having a free day, whilst also being in an experimental frame of mind. So I thought it might be rather good fun to try a model shoot, using an AI model rather than a real human one. Here’s what happened, starting with my hurriedly cobbled-together storyboard…

Storyboard

“Sabine told her sister, 25-year-old Maria, a lovely but lonely postgrad computer-science student, that she needed to “make new friends”. So that’s exactly what Maria did, but not quite in the way that Sabine anticipated. After many a night in her university’s Cybernetics Laboratory, Maria created a motley collection of AI-driven robots from parts she lifted from various parts bins. Anticipating possible mechanical failures, Maria’s robots all share a single entity, and they are all called Colin. She wants Colin to be her best friend, faithful only to her, to respond only to her commands and to be 100% reliable. So Colin runs Linux. 🙂

“Colin is a hive-like collective, in a similar sense to the Borg in Star Trek. However, there is nothing complicated or organic about how Colin does this. A simple rsync daemon starts via systemd at boot, ensuring that every Colin instance accurately replicates and shares his entity, experience and knowledge with all the other ‘Colins’. So Colin’s personality transcends whatever outer-shell Maria happens to be accessing on any given day. As Maria teaches one Colin incarnation, she essentially teaches them all.

“Importantly, Maria does not want her creation brutalised or militarised. Colin’s systems are all strongly encrypted using a blockchain based on Maria’s own DNA. So no one else can steal or abuse him. Whilst Maria is deeply sceptical about AI, she is determined that whatever intelligence Colin does acquire as a result of her programming and training is founded on kindness and respect.

“All Maria’s creations carry statutory health and safety warning signs – with very good reason. Their limbs are essentially industrial robots. Even the weediest of Maria’s creations has a grip in excess of 1 kN, and could snap her neck like a matchstick. Yet, despite all this power, Maria feels safe, comfortable and relaxed around her creations. In fact, she enjoys their company more than that of most humans.”

So that was the storyboard I hoped to create. Similar to the fictional TV character Max Headroom, my story is set a few minutes into the future. I also wanted to set it against the backdrop of an old-style European university, where learning and discovery are more important than simply buying a degree. In particular, I wanted to capture the close and caring relationship that develops between Maria and Colin. I also wanted to convey the notion that this is a very serious young woman. One who puts the pursuit of knowledge above the pursuit of happiness. One who doesn’t work to live because she lives for her work.

Stable Diffusion

So, all I have to do now is simply copy and paste my storyboard into Stable Diffusion, right?

Wrong!

Contrary to popular belief, creating this sort of thing in AI is not as straightforward as one might think. The craft is sometimes referred to as promptography, or prompt engineering, and just making a text-to-image generator such as Stable Diffusion produce one robot and one human is difficult enough. The actual “prompt” needed to produce these images was similar to:

Two beings: A (cute metal robot) (((and))) its owner, an attractive slender 25 y.o. French woman called Maria (wearing long-sleeved-crop-top and cut-off jeans), reading a big old book (together), in a very large candle-lit Victorian library, Robot and Maria are best friends. ((Linux terminal screens in the background))

Making text-to-image AI do what you want is something of a dark art. I will go into more detail in another article. Each engine has its own bizarre rules. With Stable Diffusion, certain key words can be emphasised using parentheses. However, if you use more than three sets of brackets, the results actually become less predictable. Making Maria a “French woman” kept her looks more constant. The “Two beings” at the start was to force it to draw two physical entities. The “cut-off jeans” bit made her less dowdy, but so far it has only actually produced one image of her wearing “cut-offs”. Strangely, it proved more successful at making her look a bit stylish than using the actual word “stylish”.
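To make the bracket trick a little more concrete, here is a minimal Python sketch of how nested parentheses might translate into emphasis weights. It assumes the convention used by the AUTOMATIC1111 web UI, where each pair of parentheses multiplies attention by roughly 1.1. Whether the perchance.org front end applies the same multiplier is an assumption, and the function name `emphasis_weights` is purely illustrative.

```python
def emphasis_weights(prompt: str, step: float = 1.1) -> list[tuple[str, float]]:
    """Split a prompt into chunks and weight each by its parenthesis depth.

    Assumption: every enclosing pair of parentheses multiplies the weight
    by `step` (1.1 in the AUTOMATIC1111 convention). Other front ends may
    use a different rule.
    """
    chunks: list[tuple[str, float]] = []
    buffer: list[str] = []
    depth = 0

    def flush() -> None:
        # Store the text collected so far with the weight for the current depth.
        text = "".join(buffer).strip()
        if text:
            chunks.append((text, round(step ** depth, 3)))
        buffer.clear()

    for char in prompt:
        if char == "(":
            flush()
            depth += 1
        elif char == ")":
            flush()
            depth = max(depth - 1, 0)  # tolerate a stray closing bracket
        else:
            buffer.append(char)
    flush()
    return chunks


weights = emphasis_weights("A (cute metal robot) (((and))) its owner")
for text, weight in weights:
    print(f"{weight:5.3f}  {text}")
```

Under that assumption, the triple-bracketed “and” in the prompt above ends up weighted about a third more heavily than the unbracketed text, which fits the aim of forcing two separate beings into the picture.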

Then there’s the “negative prompt” – sometimes described as “anti-description”. This is where you list, separated by commas, all the things you don’t want in the image. Again, parentheses add emphasis. And one does have to be blunt, almost to the point of seeming rude, in one’s negative prompts. Again, I’ll return to this some other time.
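As a rough illustration of the format only, the following Python sketch assembles a comma-separated negative prompt with optional bracket emphasis. The terms are hypothetical examples and not the negative prompt actually used for these images. The helper name `build_negative_prompt` and the cap of three bracket pairs (taken from the observation about brackets earlier in this article) are assumptions too.

```python
def build_negative_prompt(terms: dict[str, int], max_level: int = 3) -> str:
    """Join unwanted features into a comma-separated negative prompt.

    `terms` maps each unwanted feature to the number of parenthesis pairs
    to wrap it in. Levels are clamped to the range 0..max_level.
    """
    parts = []
    for term, level in terms.items():
        level = max(0, min(level, max_level))
        parts.append("(" * level + term + ")" * level)
    return ", ".join(parts)


# Hypothetical terms, for illustration only.
unwanted = {"extra fingers": 2, "blurry": 0, "text": 1}
print(build_negative_prompt(unwanted))
```

The resulting string would then be pasted into the interface’s negative prompt box, alongside the main prompt.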

Once one has created a set of images, one then needs to scrutinise them very carefully. In this case I needed to check that the robot has robot hands and the human has human hands – ideally with the correct number of fingers. In fact, producing the forty or so images shown here took well over 1000 rejects. Even then, they still needed quite a bit of work in GIMP to mitigate some of the other AI errors.

Diabolical liberties

The images that most on-line interfaces for Stable Diffusion produce seem to suffer from horrible compression artefacts. Fortunately, the JPEG smoothing tool in the latest G’MIC v3.5.0 plugin for GIMP v3.0 mitigates this problem to some extent. The images are also very small – 768 x 512 pixels. GIMP can fairly successfully upscale these to a more reasonable 1536 x 1024 pixels. In most instances the images are reasonably usable. But really this is a horrendous bodge that would be entirely unacceptable for most photographers. Hopefully AI generators will soon deliver images of a more useful size.
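The arithmetic behind that bodge is worth spelling out. The short Python sketch below uses only the two image sizes quoted above. The function name `upscale_summary` is illustrative, and treating the extra pixels as carrying no new detail is a simplification.

```python
def upscale_summary(src: tuple[int, int], dst: tuple[int, int]) -> dict[str, float]:
    """Compare a source image size with its upscaled size."""
    src_w, src_h = src
    dst_w, dst_h = dst
    src_pixels = src_w * src_h
    dst_pixels = dst_w * dst_h
    return {
        "scale_x": dst_w / src_w,
        "scale_y": dst_h / src_h,
        "src_megapixels": round(src_pixels / 1e6, 2),
        "dst_megapixels": round(dst_pixels / 1e6, 2),
        # Share of output pixels with no counterpart in the generated image.
        "invented_fraction": 1 - src_pixels / dst_pixels,
    }


summary = upscale_summary((768, 512), (1536, 1024))
for key, value in summary.items():
    print(f"{key}: {value}")
```

Doubling each side quadruples the pixel count, from roughly 0.39 to 1.57 megapixels, so about three quarters of the upscaled image is interpolation rather than anything the generator actually drew.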

Also, AI is rubbish at creating signs. So many of the graphics used in the images were lifted from Wikipedia, tweaked where necessary in Inkscape and added in GIMP, often replacing Stable Diffusion’s somewhat feeble attempts at signage. And of course, one still needs to overlook Maria’s large number of hairstyle changes throughout the shoot – regular users of text-to-image AI will know that it is often rather difficult to make these models produce sequences all showing the same person.

Nevertheless, whilst these images are far from perfect, I’m actually moderately pleased with the results. In some ways they actually achieved rather more than one might expect from a conventional photoshoot with a real human model. Stable Diffusion has many faults. But I simply love the robots it creates.

Will AI replace real human models?

To be clear, I’m not saying that AI will replace conventional photography with real human models. I’m certainly not planning to dismantle my studio any time soon. However, I think AI will have its uses in this context, particularly where one has an idea that one wants/needs to execute quickly, without all the palaver of actually setting up the studio and negotiating with a real human model. On the other hand, using AI did not save me any time. Correcting AI’s mistakes actually took me longer than it would have if I had shot a human model.

So I see value in shooting both ways, where appropriate. To me, an AI shoot is like having a friendly and willing model living in the same building as me. She may not be the best model in the world. But she’s available, at the drop of a proverbial hat, 24/7/365. She doesn’t mind how long the shoot takes, how dangerous or uncomfortable it is, or what costumes she wears.

Perchance

One of the most annoying aspects of text-to-image AI is the appalling web interfaces that many providers offer. The least bad that I have encountered so far is the one over at perchance.org for Stable Diffusion. I particularly like Stable Diffusion – not least because it has remained steadfastly open source, unlike many of the others that have sold out to the LFITCs (large foreign information technology corporations). In fact, I have mid-term plans/pipe-dreams to set up my own Stable Diffusion installation (strictly speaking a diffusion model rather than an LLM, or large language model) and try to train/fine-tune it from my own image library.

Future plans

I have around half a million of my own digital images (I went 100% digital back in the late 1990s). There are an additional 32,000 scanned negatives and slides from the 1980s and 1990s. Almost all of these image files now have at least some metadata, neatly stored in MariaDB (an open-source fork of the MySQL database). This database currently provides the backend to my own Piwigo instance – which is a very good product in its own right – and I’m adding both images and metadata to it all the time. So at least I know that bit works!
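For illustration only, here is a minimal Python sketch of how rows of image metadata might be turned into a caption file for fine-tuning. It assumes the `metadata.jsonl` layout with `file_name` and `text` fields that the Hugging Face image-folder loader and the diffusers example training scripts accept. The record shape (file name, title, tags) is hypothetical and is not the Piwigo schema, and the database query that would supply the rows is deliberately left out.

```python
import json
from pathlib import Path
from typing import Iterable


def write_caption_file(
    records: Iterable[tuple[str, str, list[str]]],
    out_path: str,
) -> int:
    """Write one JSON line per image: {"file_name": ..., "text": ...}.

    `records` yields (file_name, title, tags) tuples. This shape is a
    hypothetical stand-in for rows fetched from the metadata database.
    Returns the number of lines written.
    """
    count = 0
    with Path(out_path).open("w", encoding="utf-8") as handle:
        for file_name, title, tags in records:
            # Build a simple caption from the title followed by any tags.
            caption = ", ".join([title, *tags]) if tags else title
            row = {"file_name": file_name, "text": caption}
            handle.write(json.dumps(row, ensure_ascii=False) + "\n")
            count += 1
    return count
```

Calling `write_caption_file(rows, "metadata.jsonl")` in the same folder as the images would produce one caption line per image. How well such captions suit a given training script is something that would need testing.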

The idea is that AI will achieve several things, perhaps, maybe…

- Produce images to my own style and idiosyncrasies.

- Create a symbiosis between models I am shooting and material I have already shot. In fact I’ve been discussing the very concept with a regular model very recently.

- Create/develop new product/skills that I might be able to market in another context.

Of course, I realise from first-hand experience that AI certainly ain’t what it’s cracked up to be. And I definitely need to brush up my programming skills, very significantly indeed, in order to pull this off. I don’t even know if my ideas are practically viable. On the other hand, I think there are some very real possibilities with “AI”, especially for those who like to “think out of the box”.

In any event, AI is out there and it’s (mostly) free. Whilst text-to-image is still very suboptimal for the purposes I have in mind for it, I still intend to have a lot of fun with it, whichever way my ideas pan out.

Some fun Interfaces to try

In order of complexity…

- https://perchance.org/image-generator-

- https://perchance.org/ai-photo-generator

- https://perchance.org/proimagegen

- https://perchance.org/image-generator-professional

Credits

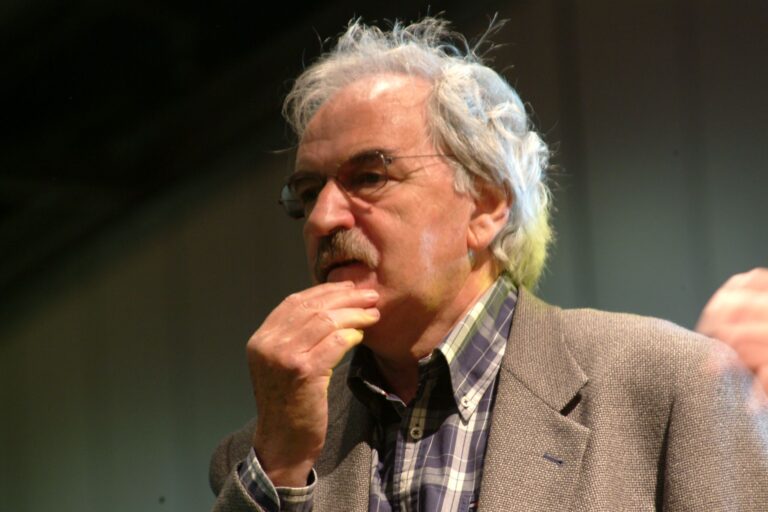

Thanks to real human model Estella Rose for giving me the idea. She is a fan of the movie character Lisa Frankenstein. The snap below was from a real shoot we did last year, where Estella Rose played the role of Lisa Frankenstein…