Is AI a serious threat to artists?

Is AI a serious threat to artists? Or yet more IT industry bullshit? I’ve been in the IT industry for many years. I still look back with some amusement at a teacher who told us, a class of 8-9 year olds, that computers were “modern labour-saving machines”. Having spent most of my working life either programming or fixing the bloody things, I find that claim somewhat at odds with my own personal experience.

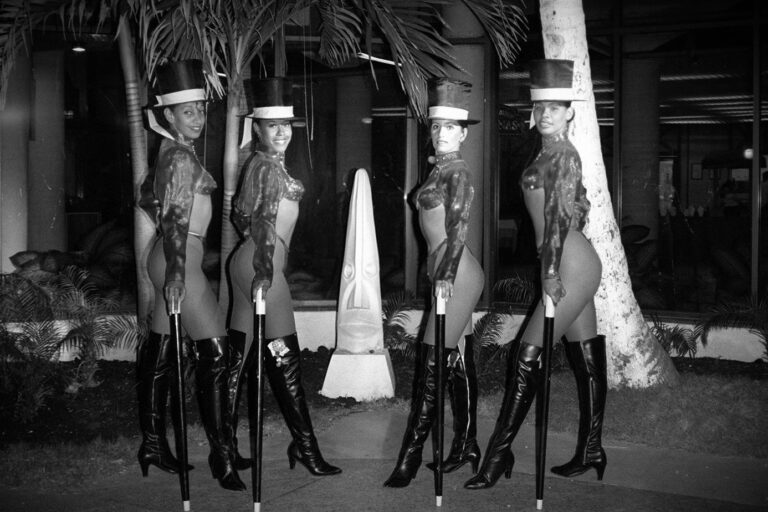

The featured image at the top of the page was supposed to be “Three attractive girls in animal print swimsuits driving red convertible in 1950’s style”. The convertible is a greeny grey metallic, and unrecognisable as a car. The girls, whilst attractive, have terribly deformed hands, especially the poor lass on our right. Badly deformed hands are a common ‘feature’ of today’s AI generated images. And they are not driving it, they are sitting on it! Granted, some AI can do rather better than this. In fact, the generator I used is usually better than this. Or to be more accurate, one has to look harder for the mistakes.

First contact

I wrote my first program, in BASIC, when I was 16 – well, I was actually 17 when I finished it. In those days, computers were too expensive to have one per college. So we had to write our programs by hand on squared paper and send them off to the LEA HQ in Winchester for processing. Mine kept coming back with errors such as “SYNTAX ERROR ON LINE 10”. So I would correct it. Next week it would come back “SYNTAX ERROR LINE 20”. Took me a whole term just to draw a sodding circle!

Whilst it did very little to teach me about programming, it did alert me to the general hyperbole and bullshit that continues to surround and infest the IT industry today.

I was delighted in the 1980s to have my own first real(ish) computer. An Amstrad 1640 – the one with the “massive” 32 Megabyte HD and 640kB RAM – soon to be replaced with a string of 386, 486, Pentium, RISC, ARM and various other CPUs. Obviously we have come quite a way since writing BASIC on maths paper.

Not so fast

Nevertheless, in that time, the IT industry has continued to bombard us with advertising hype describing its super duper life changing products. Whilst some of its tech has indeed been quite useful, it has seldom delivered the sheer scale of change that either its protagonists or its antagonists predicted. Indeed, those of us of a certain age can probably remember when we were told that television would kill books. It didn’t. UK book sales are at their highest since 2012. Ironically, it is broadcast TV that is being replaced.

But the industry loves to bombard us with clever-sounding bullshit terminology. Most of it is designed simply to part us from our money, combined with a little trumpet blowing to persuade us how effing clever the industry is. Here are a few of the more egregious examples…

- Multimedia = Plays tunes.

- Virtual reality = Quite realistic, but only if you think Tom and Jerry cartoons are realistic.

- Neural networks = Lots of files with links that let you hop from one file to another.

- The cloud = Storing your data on someone else’s computer.

- Data mining = Knowing how to run an SQL query or use a search-engine.

- Any device with the word “Smart” in its description = A device which is likely to do one or more of the following: cost too much money; annoy; underperform; behave in an unpredictable manner; spy on you; catch fire; fail catastrophically when you most need it to work as advertised.

- Artificial Intelligence = Ability to perform lots of complicated sums relatively quickly and give the appearance of being quite intelligent sometimes.

- Synthetic stupidity = What happens when humans overestimate the power of AI, and it fcuks up again.

Well OK, I made up that last one. 😉

My point

Despite the hyperbole, AI is not the same as real intelligence. It is still essentially a glorified adding-machine, complete with a pseudorandom number generator and a very big database. Granted, “AI” will certainly have its uses, especially in architecture, medicine, surgery and various forms of design and engineering. However, AI will not stop artists painting pictures. AI will not stop composers writing music. AI will not stop photographers taking photos. Artificial it may be. Intelligent it is not.

Or to put it another way, try to remember that video didn’t kill the radio star and home taping didn’t kill music, after all.

Further reading

Some readers may find my early run-ins with Craiyon moderately amusing…

Synthetic Stupidity, that got a giggle out of me.

AI is a tool. If you don’t have the words to describe the thing you want to create, it’s not happening.

Also, why are AI images so easy to identify?

The incorrect number of fingers is usually quite a giveaway, Rob, I find. Spurious limbs, impossible perspectives. I could go on, but I won’t. And even with the “best words” the bloody thing will often do whatever it feels like. Tell it to put a lass in a black dress and the chances are it will be some other colour. In fairness, it can be quite good fun – certainly brings out my ‘inner six-year-old’ at times. For example, it’s really good for creating ugly royalty-free pictures of public figures that one does not like – such as Bore Arse and Chump. But then, that’s not really much of a challenge for the machine, considering they are pretty bloody ugly to start off with. 🙂

AI is an idiot, but it’s an idiot that’s seen most of the internet.

Thus AI conclusively proves that it’s possible to be extremely well-read, yet crushingly pig-ignorant, all at the same time. 🙂

Artificial? Yes.

Intelligent? Seems AI still has a very long way to go, IMHO… 🙂